I’m writing a little program to build photo mosaics. You give it a directory, and an image, and it uses all the images in the directory to build the the target image. The target images I’m looking at mostly have a resolution of 3288x2466, so I scale my library of individual image cells to 24x18, so the output image will consist of 137x137 blocks. However, the code is fairly flexible, and I’ve tested it with some other dimensions.

The first thing I had to figure out when doing this was how to compare the two images. The basic principle I use to match the images to the pattern is very similar to the metric I used for the median filter implementation I talked about in my last programming post. I pick an arbitrary threshold, then compare the red, green, and blue values for each one. I’m working with 24-bit color, so each color channel has eight bits, and can hold a value from 0-255, 0 being black, and 255 being white. If any of the color channels differ by more than the threshold, I mark that the pixels do not match. So that gives me a value for individual pixels, but we’re looking to compare an entire image.

To evaluate whether or not a given cell fits in a given position, I simply count the number of pixels that match between the target image and the cell. Some testing showed that it did a pretty good job, so I went on to the next piece. (Though I may have cheated a bit by comparing an image with itself run through the median filter, given the similarity between the filter’s method and this one.)

The next step is to actually figure out which images go best where. Initially I decided that an exhaustive search would take far too long, and wrote a genetic algorithm which really performed poorly, and could take a good 10 minutes to do anything, after which it petered out with only about 30% of its pixels matching. And this was on a ridiculously contrived example I threw together in Kolourpaint, using a set of monochrome images I generated with a quick Perl script.

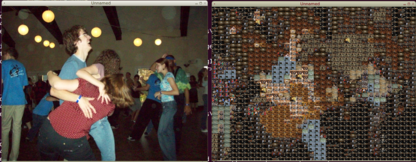

So the genetic algorithm was not working very well. I took stock of the situation, admonished myself that premature optimization is the root of all evil, and wrote a brute force method which output this. It took more time to read in and scale the images than it did to find an optimal solution.

So I compiled with -O3 optimization, and ran it on a library of 726 input images and an aforementioned 3288x2466 target image, the program gave a decent time of:

real 2m38.196s

user 2m24.890s

sys 0m12.960sJust under 3 minutes, not too shabby. The output is not phenomenal, but it’s somewhat recognizable as the original image. Here’s one smaller one (this one took about 20 seconds once it had read in and scaled the cell images.):

I set out to see how far I could get just playing around without looking at any literature, and I’ve come a pretty decent way. There’s definitely some room for improvement. The brute force (technically greedy) solution isn’t really going to scale very well past a few thousand images. And judging from that, I either need a lot more images, or I need to rethink how I’m comparing them. Possible comparison improvements:

- Drop RGB for a colorspace that makes it easier to compare colors that “look alike” to humans.

- Allow for some pixel shifting. To some extent I’m doing this already. I use pixel averaging to scale down the images, so there has already been a fair amount of what might be called ‘noise reduction’ done to the images.

- Change the repeat penalty. That example didn’t penalize repeats. Now I’ve switched to a method that divides the match percentage by the number of times it’s been used plus one. I’ll post more pictures once I’ve worked out a few more of the bugs. There’s a double free or corruption error that keeps cropping up.

The code is available on Github., though it’s still a little rough.